DagKnows Architecture Overview

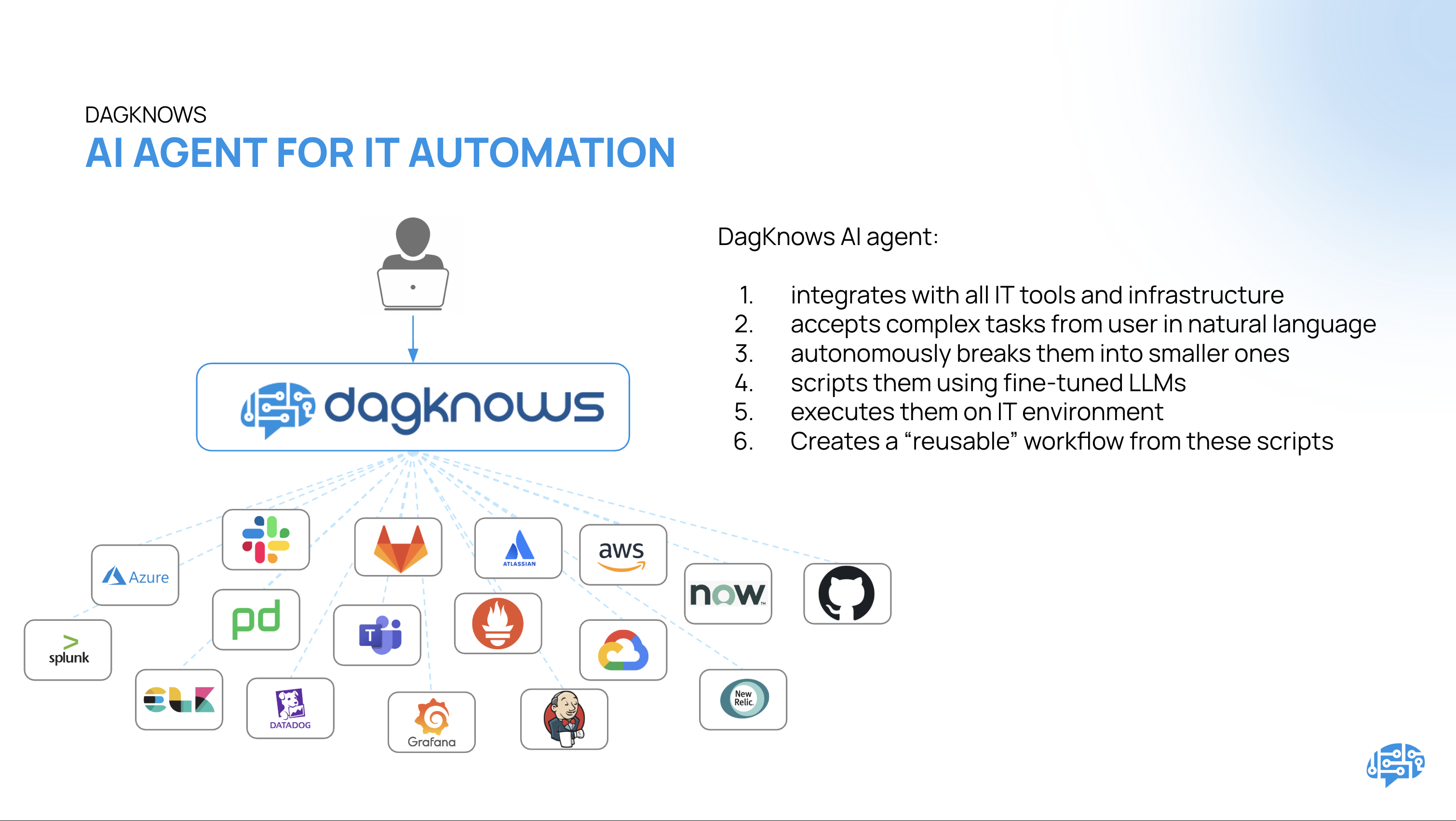

DagKnows is an agentic AI platform for automation. With DagKnows, users can automate tasks by simply chatting with an AI agent in natural language. The AI agent is optional, however: users who prefer to build and manage automation manually can do that just as easily. Let's discuss the AI agent first.

As the figure above shows, DagKnows integrates with your tools as well as your infrastructure (cloud and on-prem) and provides a natural language layer for interacting with them. A user can simply chat with DagKnows and ask it to perform actions on the connected tools and infrastructure, and DagKnows takes care of it. Underneath, DagKnows makes LLM calls to turn user requests into code and executes that code on your infrastructure or tools via API calls, CLI commands, etc.

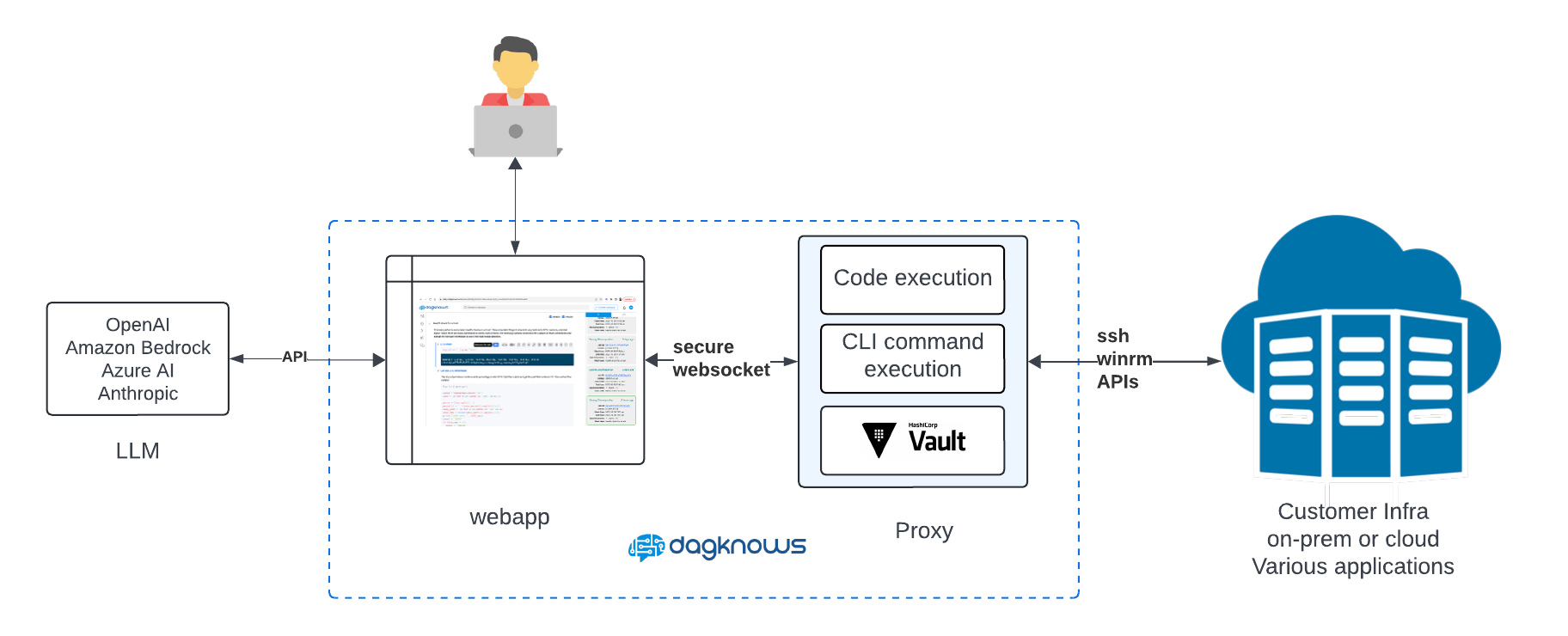

Here's the high-level architecture of DagKnows. As the figure shows, DagKnows has two main components:

- DagKnows webapp: This is the main controller, with a UI. It manages and orchestrates users, automation tasks, etc.

- DagKnows proxy: Think of it as a worker node. It is the proxy that connects to your environment and executes the scripts and commands in runbooks or workflows. While the figure shows only one proxy, you can have multiple proxies.

Here's a typical flow:

- The user logs into the webapp and starts a conversation with the AI agent. They can ask a question or specify actions, e.g. "List my ec2 instances with CPU utilization less than 10%".

- The user's prompt goes through prompt engineering and RAG context attachment in our system.

- The modified prompt is then sent to the LLM. This can be any LLM you configure. We integrate with Amazon Bedrock, Azure AI, OpenAI, Anthropic and more. It can also be your own LLM on your premises.

- The LLM responds with code to execute or a tool to use.

- The DagKnows webapp puts together a script from the code or tool and sends it to the selected proxy for execution.

- The proxy executes the script. The script may contain calls that connect to tools, servers, etc. The proxy makes the necessary calls and responds with the execution results.

- The execution results come back to the DagKnows webapp, which sends them to the LLM to check whether the user's goal has been achieved.

- If the LLM judges that the goal is achieved, the results are displayed to the user in the webapp. If not, the LLM may respond with modified code or a different tool to retry.

- When the conversation with the AI is over, the user can optionally turn all the scripts generated in the process into a workflow or runbook by clicking a button.

- The workflow can then be executed as many times as you want, without any AI involvement. Workflow execution can be triggered by alerts or on a periodic schedule.
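The conversation part of this flow can be sketched as a small loop. This is only an illustration of the control flow, not DagKnows code: `LLMReply`, `run_agent_turn`, and the three callables (`retrieve_context`, `call_llm`, `execute_on_proxy`) are hypothetical names, and the LLM and proxy are replaced by stubs.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class LLMReply:
    """Hypothetical reply shape: code to run next, or a verdict that the goal is met."""
    code: Optional[str] = None
    goal_achieved: bool = False


def run_agent_turn(
    user_prompt: str,
    retrieve_context: Callable[[str], str],   # stands in for RAG context lookup
    call_llm: Callable[[str], LLMReply],      # stands in for the configured LLM
    execute_on_proxy: Callable[[str], str],   # stands in for the selected proxy
    max_llm_calls: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Return (final result or None, every script that was executed)."""
    # Prompt engineering + RAG: attach retrieved context to the user's request.
    prompt = f"{retrieve_context(user_prompt)}\n\nUser request: {user_prompt}"
    scripts: List[str] = []
    result: Optional[str] = None
    for _ in range(max_llm_calls):
        reply = call_llm(prompt)
        if reply.goal_achieved:
            # The LLM is satisfied: show the last execution result to the user.
            return result, scripts
        if reply.code is None:
            break
        # The webapp hands the script to the proxy, which runs it.
        scripts.append(reply.code)
        result = execute_on_proxy(reply.code)
        # Feed the execution result back so the LLM can verify or retry.
        prompt += f"\n\nScript:\n{reply.code}\nExecution result:\n{result}"
    return None, scripts


# Stubbed demo: the "LLM" first returns code, then confirms the goal is met.
canned = iter([LLMReply(code="list_ec2(max_cpu=10)"), LLMReply(goal_achieved=True)])
result, scripts = run_agent_turn(
    "List my ec2 instances with CPU utilization less than 10%",
    retrieve_context=lambda p: "Context: (retrieved documents would go here)",
    call_llm=lambda p: next(canned),
    execute_on_proxy=lambda s: "i-0abc (CPU 4%)",
)
print(result)
print(scripts)
```

The returned `scripts` list corresponds to the scripts that a user could later turn into a workflow or runbook.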

Thus, the beauty of the DagKnows AI platform is that it enables users to build reusable workflows. AI interaction is needed only once, while creating the workflow. Once the workflow is ready, it can be deployed in multiple environments with no need to call LLMs.
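To make the "AI only once" point concrete, here is a minimal sketch under assumed names: `Workflow`, `save_as_workflow`, and `run_workflow` are hypothetical and do not reflect the DagKnows API. The scripts captured during a conversation are stored once and then replayed on any proxy, with no LLM call in the replay path.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Workflow:
    """Hypothetical container for scripts captured during an AI conversation."""
    name: str
    scripts: List[str] = field(default_factory=list)


def save_as_workflow(name: str, session_scripts: List[str]) -> Workflow:
    # Copy the list so later changes to the session do not alter the workflow.
    return Workflow(name=name, scripts=list(session_scripts))


def run_workflow(workflow: Workflow, execute_on_proxy: Callable[[str], str]) -> List[str]:
    # Replay every stored script in order; no LLM is involved here.
    return [execute_on_proxy(script) for script in workflow.scripts]


# Stub proxies for two environments (assumptions for illustration only).
proxies: Dict[str, Callable[[str], str]] = {
    "staging": lambda script: f"staging ran: {script}",
    "production": lambda script: f"production ran: {script}",
}

workflow = save_as_workflow("low-cpu-report", ["list_ec2(max_cpu=10)"])
for env, proxy in proxies.items():
    print(env, run_workflow(workflow, proxy))
```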